Digg recently introduced a feature that frames the content posted to Digg. It reminds me of the internet in the 90s, when framing other people’s content to put ads on top of it was commonplace (remember about.com’s ubiquitous frames?) and when one of the first bits of JavaScript I used was a “frame buster” that would keep those obnoxious banners from appearing above my web pages.

But I’m not writing this to rip on Digg. Their “Diggbar” is a good example of why the old “banner in a frame” tactic began to die off and why things like the Diggbar can succeed.

As in any case where you break the fundamental way people expect the internet to work (here, by framing the destination URL), the tradeoff between usefulness and annoyance is what will make or break the feature. Digg has included some features that are useful (for some), like:

- URL shortening. Twitter’s character limit has sparked a renaissance in short URL services, and Digg made it easy to create short URLs by appending any URL to digg.com/ (e.g. digg.com/http://agilewebmasters.com/robert/diggbar-feedback/).

- Access to comments and related submissions. In my experience with Digg, the top ranked comments often provide useful mirrors, context or even refutation, and some will find their inclusion in the Diggbar useful. As for related submissions, once Digg manages to make them useful (they are surprisingly bad at term extraction, but I’ll write about this some other day), they too can serve as an additional exploration point for users.

- Digg/Bury. Providing access to their users to digg and bury articles while reading the actual article in a frame is a good thing. Their users don’t need to vote on an article grid or go to the comments page and can go to a single destination for both content and the core digg functions.

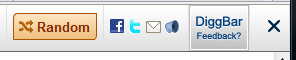

- Random. This opens up an entirely new use pattern for digg, bringing the serendipity of StumbleUpon and allowing for even more casual content discovery. This is by far the most game-changing part of the Diggbar, and in my opinion this should be reflected in the interface by giving it more prominence on the toolbar. Personally, I’d put it where they have their logo right now and incorporate the logo into the random button (call it “digg shuffle” and you are just a lower-case “i” away from the hearts and minds of a sizable cult following among their user base).
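The short URL scheme from the list above (a destination URL placed directly after digg.com/) is simple enough to sketch in a few lines. This is only an illustration: the function names are made up for this post, the `http://digg.com/` prefix is taken from the example above, and I’m assuming plain concatenation with no extra encoding, since that is all the example shows.

```python
# Hypothetical helpers illustrating the digg.com/<url> scheme described above.
# Assumption: plain concatenation, exactly as in the post's example link.
DIGG_PREFIX = "http://digg.com/"


def diggbar_link(destination_url: str) -> str:
    """Build a Diggbar-style link by placing the destination after digg.com/."""
    if not destination_url.startswith(("http://", "https://")):
        raise ValueError("expected an absolute http(s) URL")
    return DIGG_PREFIX + destination_url


def framed_destination(diggbar_url: str) -> str:
    """Recover the framed destination from a Diggbar-style link."""
    if not diggbar_url.startswith(DIGG_PREFIX):
        raise ValueError("not a digg.com/ link")
    return diggbar_url[len(DIGG_PREFIX):]


if __name__ == "__main__":
    link = diggbar_link("http://agilewebmasters.com/robert/diggbar-feedback/")
    print(link)
    print(framed_destination(link))
```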

But I most likely won’t be using this feature (call me old fashioned but I like the traditionalist notion that links to another site are best served by an actual link to the other site, not a link to your own site with a frame, and yes I am inordinately parenthetical), and digg had the good sense to include an easy way to opt out of it permanently instead of only providing the traditional “remove this frame” option of the 90s. Without this option I’d likely not use digg anymore. Hell, the fact that reddit provides a direct link to the submission URL in their RSS feeds is one of the biggest reasons I’ve seen my time on reddit increase and my time on digg decrease. For many reasons I like less, not more, between me and the content I’m trying to see.

But now that I got that convoluted rambling out of the way, I’ll get to what prompted me to write up a post in the first place, and that is something digg got fundamentally wrong.

- Despite my reservations, I wanted to try the casual content discovery that is random browsing. I clicked on the Diggbar’s “random” button a few times before a solicitation for feedback pushed the buttons over and caused my next click to launch a popup to twitter.

That brought my foray down digg’s random path to an abrupt end. If they are introducing a “toolbar” that they hope will drive more such use, they should take greater care to keep UI elements predictably positioned, especially a button whose typical use pattern involves frequent clicks (I note with great humility that my brilliant “digg shuffle” idea would have precluded this).

- They are soliciting feedback through SurveyMonkey.com, and I’d already provided feedback. So far so good, but now I discover that this link leads me to a page saying “Thanks for Taking the Survey. Looking to Create Your Own?” and does not allow me to provide any additional feedback.

  This is daft for several reasons. An obvious one to start with is that they continue to solicit feedback when I can no longer provide it, but that doesn’t even begin to address the more fundamental mistake of using a third party provider that can’t handle the simple use case of a user having feedback and then, as in my example of seeing additional behavior after the first feedback was provided, having more. It’s not much more difficult to roll their own simple and scalable solution for feedback on Google App Engine or Amazon SimpleDB than it is to use a third party with such limits on their users’ experience, and that is the solution I’d elect in their shoes. But even if they wanted to eat someone else’s dog food, they could have at least provided a more useful forum such as Google Moderator or Get Satisfaction that would have allowed for more than one instance of feedback (though given the open communication, those might be more transparent than digg prefers for their initial feedback).

  As it stands, I had feedback that their medium could not collect and that they’ll have to happen upon in this blog post to read (8-ball says “Outlook not so good” and tossed in “nobody reads this blog anyway”). There are more elegant solutions to prevent sock puppetry than such a limitation, and if I were running a site digg’s size I’d want to get the basics right. And to be fair, if they were in my shoes, they’d probably be headed to bed right now instead of waxing loquacious on such usability inanities at this hour.
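To make the “more elegant solutions” point a little more concrete, here is a minimal sketch of a feedback box that accepts repeat feedback from the same user and discourages flooding with a per-user rate limit rather than a one-submission cap. Everything in it is hypothetical: the `FeedbackBox` name, the limits, and the in-memory storage are my assumptions for illustration, not anything digg or SurveyMonkey actually does. A real version would sit on top of a datastore like the ones mentioned above.

```python
import time
from collections import defaultdict


class FeedbackBox:
    """Hypothetical in-memory feedback store with a per-user rate limit."""

    def __init__(self, max_per_window: int = 5, window_seconds: float = 3600.0):
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        # user_id -> list of (timestamp, text), oldest first
        self._entries = defaultdict(list)

    def submit(self, user_id, text, now=None) -> bool:
        """Store feedback; return False if it is empty or the user is over the limit."""
        now = time.time() if now is None else now
        text = text.strip()
        if not text:
            return False
        # Count only submissions that fall inside the current window.
        recent = [t for t, _ in self._entries[user_id] if now - t < self.window_seconds]
        if len(recent) >= self.max_per_window:
            return False
        self._entries[user_id].append((now, text))
        return True

    def entries_for(self, user_id):
        """Return every piece of feedback a user has submitted, oldest first."""
        return [text for _, text in self._entries.get(user_id, [])]


if __name__ == "__main__":
    box = FeedbackBox(max_per_window=2, window_seconds=60)
    print(box.submit("robert", "The random button is great."))
    print(box.submit("robert", "The feedback prompt moved the buttons."))
    print(box.entries_for("robert"))
```

The design choice is the one argued for above: a second round of feedback is the normal case, so the limit throttles volume instead of forbidding a return visit.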

Google has
